| WoS Document Type | Records (n) | Records (pct) |
|---|---|---|
| article | 5,434,906 | 82.24 |
| review | 510,322 | 7.72 |
| editorial material | 266,705 | 4.04 |
| meeting abstract | 117,136 | 1.77 |
| letter | 113,874 | 1.72 |
| book review | 67,571 | 1.02 |
| correction | 67,271 | 1.02 |
| news item | 11,983 | 0.18 |
| retraction | 8,290 | 0.13 |
| biographical-item | 6,516 | 0.10 |
| Other* | 3,669 | 0.06 |
| total | 6,608,243 | 100.00 |

*Includes cc meeting heading, poetry, expression of concern, reprint, art exhibit review, item withdrawal, film review, bibliography, fiction, creative prose, theater review, record review, music performance review, software review, music score review, hardware review, tv review, radio review, dance performance review, excerpt, database review, data paper, note, and script. It should be noted that TV Review, Radio Review, Video Review were retired as document types and are no longer added to items indexed in the WoS Core Collection. They are still usable for searching or refining/analyzing search results.

This work is currently under review. Please cite this working paper as:

Mongeon, P., Hare, M., Riddle, P., Wilson, S., Krause, G., Marjoram, R., & Toupin, R. (2025). Investigating Document Type, Language, and Publication Year and Author Count Discrepancies Between OpenAlex and the Web of Science [Working paper]. Quantitative Science Studies Lab. https://qsslab.github.io/wos-openalex/index.html

Abstract

Bibliometrics, whether used for research or research evaluation, relies on large multidisciplinary databases of research outputs and citation indices. The Web of Science (WoS) was the main supporting infrastructure of the field for more than 30 years until several new competitors emerged. OpenAlex, a bibliographic database launched in 2022, has distinguished itself for its openness and extensive coverage. While OpenAlex may reduce or eliminate barriers to accessing bibliometric data, one of the concerns that hinders its broader adoption for research and research evaluation is the quality of its metadata. This study aims to assess metadata quality in OpenAlex and WoS, focusing on document type, publication year, language, and number of authors. By addressing discrepancies and misattributions in metadata, this research seeks to enhance awareness of data quality issues that could impact bibliometric research and evaluation outcomes.

Introduction

Bibliometrics has been used in research and data-driven research evaluation for approximately half a century (Narin, 1976), originally supported by the development of the Web of Science (WoS), which was conceptualized in 1955 and launched in 1964 (Clarivate, n.d.). It took more than 30 years for other players to emerge: Elsevier’s Scopus was founded in 1996, Crossref in 1999, Google Scholar in 2004, Microsoft Academic (now integrated into OpenAlex) and Dimensions in 2018, and OpenAlex in 2022.

The value of bibliographic data sources is derived from different elements, including their coverage, completeness, and data accuracy (Visser et al., 2021), and may be contingent on the intended use. Major proprietary databases like Scopus and WoS are widely regarded as high-quality sources (Singh et al., 2024), but they are certainly not free of errors. Past studies have identified issues such as DOI duplication (“identity cloning”) and inaccurate indexing of citations (Franceschini et al., 2015; Franceschini et al., 2016b, 2016a). Álvarez-Bornstein et al. (2017) found 12% of sampled WoS records lost funding data and showed inconsistencies across subfields. Maddi & Baudoin (2022) reported 8% of papers from 2008–2012 had incomplete author-affiliation links, improving to under 1.5% after database updates. Liu et al. (2018) noted over 20% of missing author addresses in WoS, varying by document type and year. Liu et al. (2021) outlined four causes of discrepancies between Scopus and WoS: differing publication date policies, document omissions, duplicate entries, and metadata errors. Donner (2017), for example, found document type discrepancies between WoS and journal websites or article full text for a sample of 791 publications, with letters and reviews particularly affected.

The advent of OpenAlex, an open database that indexes over 250 million scholarly works with broader coverage of the Humanities, non-English languages, and the Global South than traditional indexes (Priem et al., 2022), generated a new wave of studies aimed at better understanding this new source and assessing its suitability for bibliometric analyses and research evaluation. Many of these studies compared the coverage of OpenAlex to that of more established databases. Culbert et al. (2024) investigated the coverage of reference items between OpenAlex, WoS, and Scopus. They found that OpenAlex was comparable with commercial databases from an internal reference coverage perspective if restricted to a core corpus of publications similar to the other two sources, though it lacked cited references. Alonso-Alvarez & Eck (2024) also found missing references. OpenAlex was found to lack funding metadata (Schares, 2024) and institutional affiliations (Bordignon, 2024; Zhang et al., 2024), but to have higher author counts compared to WoS and Scopus (Alonso-Alvarez & Eck, 2024). Haupka et al. (2024) observed that a broader range of materials were classified as articles in OpenAlex compared to Scopus, WoS, and PubMed, potentially explained by OpenAlex’s reliance on Crossref’s less granular system of classification (Ortega & Delgado-Quirós, 2024). At the journal level, Simard et al. (2025) and Maddi et al. (2024) found that OpenAlex provides a higher and more geographically balanced coverage of open access journals compared to WoS and Scopus. Céspedes et al. (2024) also found that OpenAlex had a broader linguistic coverage (75% English) than WoS (95% English). They also identified discrepancies in OpenAlex’s language metadata, noting that approximately 7% of articles were incorrectly classified as English, mainly due to the algorithmic detection of language from the title and abstract metadata in OpenAlex.

OpenAlex’s openness and broad coverage of the scholarly record have likely contributed to its fast adoption as a data source for scientometric research. There is evidence that it is indeed suitable in certain cases, such as large-scale, country-level analyses (Alperin et al., 2024). However, there is some warranted resistance to its adoption for formal research evaluation purposes given its known imperfections, such as partially correct or erroneous metadata (Haunschild & Bornmann, 2024). The Centre for Science and Technology Studies (CWTS) was, to our knowledge, the first to use OpenAlex for such purposes. Since 2023, they have been using it to produce the open edition of their now well-established CWTS Leiden Ranking.

The CWTS Leiden Ranking Open Edition employed several strategies to mitigate the limitations of the OpenAlex database and the risks associated with its use. It was limited to approximately 9.3 million articles and reviews in English, published in a set of core, international journals and citing or cited in other core journals (see Eck et al., 2024 and the ranking website for more details). The objective was to reproduce the WoS-based Leiden Ranking as closely as possible. As such, the open ranking does not take much advantage of the more extensive coverage of OpenAlex. In their blog post, Eck et al. (2024) provide some insights on the publications included in the two versions of the ranking. They indicate that 2.5 million publications are included only in the Open Edition, in most cases (1.7 million publications) because they are not covered in WoS. On the other hand, 0.7 million publications are only included in the WoS-based ranking, explained by publication year discrepancies between the two databases, leading to some articles being included in one ranking but not the other, and by the missing institutional affiliations in OpenAlex records (Eck et al., 2024). The challenges of determining the publication year of an article were also previously discussed by Haustein et al. (2015).

Because of the large overlap in the data used to produce the two rankings, they produced similar outcomes, with the usual suspects appearing at the top of the ranking. Yet, there were some differences with some universities moving up or down by a few ranks. For example, Dalhousie University ranked #15 for Canadian universities in the WoS version of the ranking, and #13 in the OpenAlex version. Being presented with two different rankings that used the same process but provide different outcomes due to their data sources may lead to the dilemma of Segal’s law, according to which “A man with a watch knows what time it is. A man with two watches is never sure” (Wikipedia contributors, 2024).

Research objectives

Our study was partly inspired by the launch of the CWTS Leiden Ranking Open Edition and its differences with the WoS-based edition. However, our intent is not to compare the two rankings and explain the differences in their outcome, but to compare metadata records for the subset of publications that are indexed in both OpenAlex and WoS, with a focus on describing discrepancies and measuring their frequency. We do not consider WoS as a gold standard against which OpenAlex records can be tested for their quality. Instead, we consider each database as a benchmark for the other. Our research questions are as follows:

- RQ1: How frequent are discrepancies between WoS and OpenAlex records in terms of document type, language, publication year, and number of authors?

- RQ2: What share of records with discrepancies are correct in WoS, OpenAlex, neither, or both?

- RQ3: What explains these discrepancies?

The choice of the metadata elements selected for the study (document type, language, publication year, and number of authors) was guided by their use as factors for inclusion in the CWTS Leiden Ranking: articles and reviews (document type) in English (language) published in a three-year period (publication year). The same metadata are also relevant in the production of normalized indicators for bibliometric research more generally. Indeed, normalized indicators would typically aim to compare publications of the same type, published in the same year. Additionally, the number of authors tends to be the denominator when counting publications using the fractional counting method (also provided in the Leiden Ranking). Therefore, discrepancies in these metadata elements of publication records are more likely to have a direct effect on the outcome of bibliometric analysis.
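To illustrate why the number of authors matters for fractional counting, here is a minimal sketch that assumes one unit of credit per paper is split equally among its authors. This is a common author-level variant and not necessarily the exact scheme used in the Leiden Ranking; the function name and the sample author counts are hypothetical.

```python
from fractions import Fraction


def fractional_credit(n_authors: int) -> Fraction:
    """Credit per author when one paper is split equally among its authors."""
    if n_authors < 1:
        raise ValueError("a paper needs at least one author")
    return Fraction(1, n_authors)


# Hypothetical record: the same paper has 4 authors in one database
# and 5 authors in the other.
credit_a = fractional_credit(4)
credit_b = fractional_credit(5)

# The author-count discrepancy changes the credit assigned to each author.
print(credit_a - credit_b)  # prints 1/20
```

Even a difference of one author changes every fractional count derived from the record, which is why this field is compared alongside document type, language, and year.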

To our knowledge, our study is the first to compare the metadata quality of the subset of publications indexed in both OpenAlex and WoS. Our findings may offer useful insights to those using or planning to use OpenAlex, and may also point to possible paths to correct metadata errors, which could be helpful for database providers. Our intent is not to establish best practices or to formulate recommendations for database creators or users, nor to determine which database is better than the other. Our study is solely motivated by the desire to contribute to a better understanding of our data sources.

Data and methods

Data collection

The WoS data used in this study were retrieved from a relational database version of the WoS hosted by the Observatoire des sciences et des technologies (OST) and limited to the Science Citation Index (SCI), the Social Sciences Citation Index (SSCI), and the Arts & Humanities Citation Index (A&HCI). We collected all WoS records with a DOI published between 2021 and 2023 (N = 7,661,474). We removed 30,394 (0.4%) WoS records with multiple document types to avoid complications with the analysis. Of the remaining 7,631,080 WoS records, 6,599,479 (86.5%) had a DOI match in the February 2024 snapshot of OpenAlex accessed through Google BigQuery (see Mazoni & Costas, 2024). Conversely, we collected all OpenAlex records with a DOI and a publication year between 2021 and 2023 (N = 26,942,411), and found 13,618 additional matches to WoS records published outside of the 2021-2023 period, for a total of 6,613,097 records matched. We used the subset of 6,608,243 Web of Science records with a single DOI match in OpenAlex for our analysis. In the OST database, every journal is assigned to one of 143 specialties and 14 disciplines of the National Science Foundation (NSF) classification. For our analysis, we grouped the disciplines into four groups: Arts and Humanities (AH), Biomedical Research (BM), Natural Science and Engineering (NSE), and Social Sciences (SS).
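The DOI matching step can be sketched as follows. This is only an illustration under stated assumptions: the normalization rules (lowercasing, stripping a resolver prefix) and both function names are hypothetical, and the actual matching was done on database tables rather than Python lists.

```python
from collections import Counter


def normalize_doi(doi: str) -> str:
    """Put a DOI in a comparable form (assumed rules: trim, lowercase, drop prefix)."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def single_doi_matches(wos_dois, openalex_dois):
    """Keep the WoS DOIs that match exactly one OpenAlex record."""
    oa_counts = Counter(normalize_doi(d) for d in openalex_dois)
    return [d for d in wos_dois if oa_counts[normalize_doi(d)] == 1]


# Made-up DOIs for illustration only.
wos = ["10.1000/a", "10.1000/B", "10.1000/c"]
openalex = ["https://doi.org/10.1000/a", "10.1000/b", "10.1000/b"]

# "10.1000/B" matches two OpenAlex records and "10.1000/c" matches none,
# so only the first DOI is kept.
matched = single_doi_matches(wos, openalex)
print(matched)
```

Restricting the analysis to single matches avoids having to decide which of several OpenAlex records should be compared with a given WoS record.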

Identification of discrepancies

For each matching WoS and OpenAlex record, we compared the following four metadata elements: 1) document type, 2) language, 3) publication year, and 4) number of authors.

For the document type, we did not consider discrepancies where a record is a review according to WoS and an article according to OpenAlex, and vice versa (i.e., we consider articles and reviews as the same document type). Furthermore, OpenAlex indexes conference papers as articles, and the source type (conference) is meant to distinguish them from journal articles. For these reasons, we only considered discrepancies for which the record is identified as an article or a review in either WoS or OpenAlex, but is identified as neither in the other source. We also excluded discrepancies for which the record is a meeting abstract in WoS and an article in OpenAlex. Overall, we found 429,692 discrepancies that met these criteria (6.5% of all records in the dataset).
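The inclusion rules above can be written as a small predicate. This is a sketch of the stated criteria, not the code used for the study; the function name is hypothetical and the type labels are assumed to be lowercase strings like those in the tables.

```python
ARTICLE_LIKE = {"article", "review"}


def is_doctype_discrepancy(wos_type: str, openalex_type: str) -> bool:
    """Apply the stated criteria for a document type discrepancy."""
    wos_type = wos_type.strip().lower()
    openalex_type = openalex_type.strip().lower()

    wos_core = wos_type in ARTICLE_LIKE
    oa_core = openalex_type in ARTICLE_LIKE

    # Articles and reviews are treated as the same type, and records that
    # are neither in both sources are out of scope.
    if wos_core == oa_core:
        return False

    # Meeting abstracts in WoS that are articles in OpenAlex are excluded.
    if wos_type == "meeting abstract" and openalex_type == "article":
        return False

    return True


print(is_doctype_discrepancy("review", "article"))              # False
print(is_doctype_discrepancy("editorial material", "article"))  # True
print(is_doctype_discrepancy("meeting abstract", "article"))    # False
```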

The identification of discrepancies in language, publication year, and number of authors was limited to the subset of 5,936,235 articles and reviews with no discrepancy in document type (i.e., the records that are articles or reviews in both WoS and OpenAlex). The publication language is recorded in its long form (e.g., English) in our WoS data and its ISO code (e.g., en) in OpenAlex, so we created a list of all language combinations (e.g., English-EN, English-FR), determined which ones constitute discrepancies, and identified 33,516 discrepancies (0.6% of all articles and reviews) in publication language between WoS and OpenAlex.
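A minimal sketch of the language comparison is shown below. It swaps the list of language combinations described above for a lookup table from ISO codes to language names, which is a simplification; the mapping is deliberately partial, the function name is hypothetical, and returning `None` for an unknown OpenAlex code is an assumption rather than the rule used in the study.

```python
# Partial, illustrative mapping from ISO 639-1 codes to WoS-style language names.
ISO_TO_NAME = {
    "en": "English",
    "fr": "French",
    "de": "German",
    "es": "Spanish",
    "pt": "Portuguese",
}


def language_discrepancy(wos_language, openalex_code):
    """Return True if the labels disagree, False if they agree,
    and None if the OpenAlex code is missing or not in the mapping."""
    name = ISO_TO_NAME.get((openalex_code or "").lower())
    if name is None:
        return None
    return name.lower() != wos_language.strip().lower()


print(language_discrepancy("English", "en"))  # False
print(language_discrepancy("English", "fr"))  # True
print(language_discrepancy("German", None))   # None
```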

The publication year and number of authors being numeric indicators, identifying discrepancies in these metadata fields is easily done by calculating the difference between the two values and flagging all non-zero values. We identified a total of 480,884 discrepancies (8.1% of all articles and reviews) in publication years and 71,133 discrepancies (1.2% of all articles and reviews) in the number of authors. We recorded them both as dichotomous variables (discrepancy vs no discrepancy) and as differences between the two values, which allowed us to measure both the frequency and the strength of the discrepancies.

We obtain the direction and extent of the discrepancies by subtracting the OpenAlex publication year or number of authors from the WoS value. A difference of 1 indicates that, according to WoS, the article was published one year later (or has one more author) than according to OpenAlex. Conversely, a difference of -1 indicates that the article was published one year earlier (or has one fewer author) according to WoS than according to OpenAlex. We then grouped cases into the following bins: -4 or less, -2 to -3, -1, 1, 2 to 3, and 4 or more.
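The difference and binning steps can be sketched as follows. The function names are hypothetical and the "no discrepancy" label for a difference of zero is added for completeness; the bin boundaries follow the description above.

```python
def signed_difference(wos_value: int, openalex_value: int) -> int:
    """WoS value minus OpenAlex value (publication year or author count)."""
    return wos_value - openalex_value


def bin_difference(diff: int) -> str:
    """Assign a signed difference to one of the bins used in the analysis."""
    if diff == 0:
        return "no discrepancy"
    if diff <= -4:
        return "-4 or less"
    if diff <= -2:
        return "-2 to -3"
    if diff == -1:
        return "-1"
    if diff == 1:
        return "1"
    if diff <= 3:
        return "2 to 3"
    return "4 or more"


# A record dated 2023 in WoS and 2022 in OpenAlex has a difference of 1.
print(bin_difference(signed_difference(2023, 2022)))  # 1
# A record dated 2019 in WoS and 2023 in OpenAlex has a difference of -4.
print(bin_difference(signed_difference(2019, 2023)))  # -4 or less
```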

Investigation of discrepancies

We drew a random sample of discrepancies for manual investigation. For each type of discrepancy, we calculated the required sample size with a 95% confidence level and a margin of error of ±5%. The sampled discrepancies were manually investigated by looking at the article on the journal’s website and the full text when necessary and available. We recorded whether the WoS or OpenAlex record was correct and, when possible, explained the discrepancy. For the publication year, it is typical for articles to have two publication dates: the date of the first online publication and the date of the publication of the issue. For cases in which the online publication year was recorded in one database and the issue year was recorded in the other, we did not consider either database to be in error.
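The text does not state which formula was used for the sample size calculation. One common choice, shown here purely as an assumption, is Cochran's formula for a proportion with a finite population correction, using z = 1.96 for a 95% confidence level and the most conservative proportion p = 0.5. The function name is hypothetical, and the sample sizes reported in the tables need not match this formula.

```python
import math


def sample_size(population: int, z: float = 1.96,
                margin: float = 0.05, p: float = 0.5) -> int:
    """Cochran's sample size with finite population correction (assumed method)."""
    # Sample size for an infinite population.
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Correction for a finite population of known size.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


print(sample_size(10_000))   # 370
print(sample_size(480_884))  # 384
```

For large populations the result approaches the uncorrected value of about 385, so the required sample barely depends on the number of discrepancies once that number is in the tens of thousands.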

For author discrepancies, the landing page and, when available, the published version were examined for author counts. Where available, author declarations, acknowledgements, and CRediT declarations were also considered to clarify authorship where groups or consortia were involved in the production of the work. For languages, the full text was the primary source, followed by the publisher’s landing page for recording observations on language assignment. We recorded multiple language full texts if available, as well as translated abstracts available on the landing page. There are limitations to using the publisher landing page with a web browser: the language preference sent with the GET request may cause the page to be displayed in the reader’s preferred language, if the server is configured to respond to such a request.
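One way this limitation can arise is through the standard HTTP `Accept-Language` request header, which browsers send automatically. The sketch below only builds a request with an explicit language preference and does not send it; the function name and the DOI in the URL are hypothetical, and whether a given publisher site honours the header is an assumption that would have to be checked case by case.

```python
from urllib.request import Request


def build_landing_page_request(url: str, language: str = "en") -> Request:
    """Prepare (but do not send) a GET request that states a language preference."""
    return Request(url, headers={"Accept-Language": language}, method="GET")


# Hypothetical DOI used only to show the shape of the request.
req = build_landing_page_request("https://doi.org/10.1000/example", "fr")

# urllib stores header names in capitalized form ("Accept-language").
print(req.get_method(), req.get_header("Accept-language"))
```

Fixing the header to a known value when checking landing pages would make the displayed language independent of the investigator's browser settings.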

This process allowed us to go beyond the simple counting of differences between the databases, gain insight into the different factors that can cause discrepancies, and estimate the percentage of erroneous records in each database. One caveat, of course, is that because our process relies on discrepancies to identify errors, we do not consider cases where both databases contain the same error.

Results

We structured our results section by type of discrepancy. For each, we first show the distribution of values in the Web of Science and the OpenAlex datasets. Note that these are not the distributions in the entire Web of Science and OpenAlex databases, but the distributions in the subset of records with a DOI match between the databases that are included in our analysis. Second, we present descriptive statistics related to the discrepancies identified and the sample that was used for investigations. Finally, we present the results of these investigations.

Document type

Document type distribution in WoS and OpenAlex

Table 1 and Table 2 present the distribution of records across document types in WoS and OpenAlex, respectively, to provide a general picture of the databases’ content and the differences in their classification. While WoS contains twice as many document types as OpenAlex, these differences appear mainly among the less frequent types, in line with the findings of Haupka et al. (2024). Most documents in both data sources are articles and reviews.

| OpenAlex Document Type | Records (n) | Records (pct) |
|---|---|---|
| article | 5,845,248 | 88.45 |
| review | 511,686 | 7.74 |
| letter | 136,051 | 2.06 |
| editorial | 59,662 | 0.90 |
| erratum | 48,189 | 0.73 |
| retraction | 4,704 | 0.07 |
| book-chapter | 1,333 | 0.02 |
| preprint | 872 | 0.01 |
| paratext | 307 | 0.00 |
| other | 96 | 0.00 |
| Other* | 95 | 0.00 |
| total | 6,608,243 | 100.00 |

*Includes report, dataset, other, dissertation, supplementary-materials, and reference-entry.

Table 3 and Table 4 show that the vast majority of discrepancies, 301,850 of 310,843 (97%), are cases where a record is an article or review in OpenAlex but not in WoS. In both tables, the majority of discrepancies indicate a misclassification of a record as an article or review. However, discrepancies point to a true misclassification much more often when the record is an article or review in OpenAlex (93.5% of the sample) than when it is an article or review in WoS (68.8% of the sample). This generally points to a much larger number of OpenAlex records misclassified as articles or reviews compared to WoS.

| Type in OpenAlex | Discrepancies (n) | Discrepancies (pct) | Sample (n) | Sample (pct) | Errors (n) | Errors (pct) |
|---|---|---|---|---|---|---|
| letter | 5,008 | 55.7 | 331 | 56.7 | 219 | 66.2 |
| editorial | 1,341 | 14.9 | 87 | 14.9 | 63 | 72.4 |
| book-chapter | 1,304 | 14.5 | 85 | 14.6 | 85 | 100.0 |
| preprint | 840 | 9.3 | 54 | 9.2 | 14 | 25.9 |
| paratext | 240 | 2.7 | 14 | 2.4 | 14 | 100.0 |
| erratum | 149 | 1.7 | 8 | 1.4 | 6 | 75.0 |
| other | 83 | 0.9 | 4 | 0.7 | 0 | 0.0 |
| retraction | 28 | 0.3 | 1 | 0.2 | 1 | 100.0 |
| book | 5 | 0.1 | 0 | 0.0 | 0 | 0.0 |
| total | 8,998 | 100.0 | 584 | 100.0 | 402 | 68.8 |

| Type in WoS | Discrepancies (n) | Discrepancies (pct) | Sample (n) | Sample (pct) | Errors (n) | Errors (pct) |
|---|---|---|---|---|---|---|
| editorial material | 161,628 | 53.2 | 350 | 54.3 | 308 | 88.0 |
| book review | 67,435 | 22.2 | 146 | 22.6 | 146 | 100.0 |
| letter | 30,699 | 10.1 | 65 | 10.1 | 65 | 100.0 |
| correction | 19,303 | 6.4 | 40 | 6.2 | 40 | 100.0 |
| news item | 11,336 | 3.7 | 23 | 3.6 | 23 | 100.0 |
| biographical-item | 5,941 | 2.0 | 11 | 1.7 | 11 | 100.0 |
| retraction | 3,727 | 1.2 | 7 | 1.1 | 7 | 100.0 |
| other | 3,513 | 1.2 | 3 | 0.5 | 3 | 100.0 |
| total | 303,582 | 100.0 | 645 | 100.0 | 603 | 93.5 |

Publication Language

Language Distribution in WoS and OpenAlex

Table 5 and Table 6 show the distribution of articles and reviews across languages in WoS and OpenAlex, respectively, limited to the 10 most frequent languages. Unsurprisingly, nearly all records are in English in both databases. It is important to note, once again, that our results are not meant to reflect the composition of the entire databases, but only the subset of documents included in both, which is expected to underestimate the representation of non-English literature, especially on the OpenAlex side. It is also important to reiterate that our WoS data include only the SCI-E, SSCI, and A&HCI, and not the ESCI, which would include more non-English records.

| Language in WoS | Records (n) | Records (pct) |
|---|---|---|
| English | 5,895,515 | 99.31 |
| German | 14,453 | 0.24 |
| Spanish | 7,969 | 0.13 |
| French | 6,172 | 0.10 |
| Chinese | 3,915 | 0.07 |
| Russian | 1,978 | 0.03 |
| Portuguese | 1,526 | 0.03 |
| Polish | 873 | 0.01 |
| Japanese | 773 | 0.01 |
| Turkish | 580 | 0.01 |
| Other | 2,481 | 0.04 |
| Total | 5,936,235 | 100.00 |

Aside from the dominance of English in the dataset, we also observe differences in the languages that compose the top 10 in each database, as well as in their ranking. Spanish, French, and Portuguese are much more prevalent in the OpenAlex records than in WoS. On the other hand, Chinese is the 5th most prevalent language in the WoS data, but does not make the top 10 in OpenAlex. Since these are supposed to be the same set of records, these differences point to significant discrepancies in the language between the two databases.

| Language in OpenAlex | Records (n) | Records (pct) |
|---|---|---|
| English | 5,886,864 | 99.17 |
| Spanish | 13,777 | 0.23 |
| German | 13,244 | 0.22 |
| French | 10,466 | 0.18 |
| Portuguese | 3,203 | 0.05 |
| Polish | 1,058 | 0.02 |
| Turkish | 909 | 0.02 |
| Hungarian | 841 | 0.01 |
| Croatian | 591 | 0.01 |
| Romanian | 537 | 0.01 |
| Other | 3,653 | 0.06 |
| Unknown | 1,092 | 0.02 |
| Total | 5,936,235 | 100.00 |

Language Discrepancies

For this part of the analysis, we focus on articles and reviews that are in English in Web of Science or in OpenAlex, and in a language other than English in the other database. In other words, we do not investigate discrepancies between Portuguese and Spanish or French and German, but only discrepancies for which one of the languages is English (e.g., English-German, English-Chinese).

Table 7 and Table 8 respectively display the number of discrepancies found for articles in English in WoS and articles in English in OpenAlex. They also display the number of records manually investigated to determine whether the discrepancies stem from mislabeling non-English content as English, as well as the result of that investigation. To understand how an incorrect assignment may have occurred, we looked at the abstracts and full text on the landing page as well as the full text when it was available. Most sampled works seem to have multi-language abstracts either on the landing page or in the PDF of the full text. Also, some articles are published in multiple languages, meaning that for some of the discrepancies observed, both OpenAlex and WoS are correct.

A first observation is that WoS appears to be more accurate than OpenAlex in terms of publication language. Only 20,086 English publications in WoS were in another language according to OpenAlex, and in slightly less than half (48.2%) of the verified cases, the article was in fact in English.

| Language in OpenAlex | Discrepancies (n) | Discrepancies (pct) | Sample (n) | Sample (pct) | Errors (n) | Errors (pct) |
|---|---|---|---|---|---|---|
| Spanish | 7,029 | 34.8 | 128 | 38.0 | 82 | 64.1 |
| French | 5,580 | 27.6 | 101 | 30.0 | 31 | 30.7 |
| Portuguese | 2,111 | 10.4 | 38 | 11.3 | 23 | 60.5 |
| German | 1,598 | 7.9 | 26 | 7.7 | 8 | 30.8 |
| Romanian | 529 | 2.6 | 8 | 2.4 | 0 | 0.0 |
| Hungarian | 520 | 2.6 | 9 | 2.7 | 9 | 100.0 |
| Turkish | 464 | 2.3 | 6 | 1.8 | 5 | 83.3 |
| Croatian | 400 | 2.0 | 4 | 1.2 | 0 | 0.0 |
| Polish | 290 | 1.4 | 3 | 0.9 | 3 | 100.0 |
| Other | 1,690 | 8.4 | 14 | 4.2 | 6 | 42.9 |
| Total | 20,211 | 100.0 | 337 | 100.0 | 167 | 49.6 |

However, we notice that for some languages, such as French, German, Romanian and Croatian, the discrepancies correctly flagged articles mislabelled as English in WoS, although the sample sizes for Romanian and Croatian were quite small. On the other hand, the majority of articles labelled as English in WoS and as Spanish, Portuguese, or Turkish in OpenAlex were actually found to be in English.

| Language in WoS | Discrepancies (n) | Discrepancies (pct) | Sample (n) | Sample (pct) | Errors (n) | Errors (pct) |
|---|---|---|---|---|---|---|
| Chinese | 3,307 | 26.6 | 105 | 31.8 | 102 | 97.1 |
| German | 2,589 | 20.8 | 45 | 13.6 | 37 | 82.2 |
| Russian | 1,723 | 13.8 | 49 | 14.8 | 46 | 93.9 |
| French | 1,336 | 10.7 | 32 | 9.7 | 32 | 100.0 |
| Spanish | 1,200 | 9.6 | 20 | 6.1 | 18 | 90.0 |
| Japanese | 620 | 5.0 | 23 | 7.0 | 23 | 100.0 |
| Portuguese | 411 | 3.3 | 13 | 3.9 | 10 | 76.9 |
| Turkish | 131 | 1.1 | 6 | 1.8 | 6 | 100.0 |
| Polish | 92 | 0.7 | 3 | 0.9 | 3 | 100.0 |
| Other | 1,036 | 8.3 | 34 | 10.3 | 33 | 97.1 |
| Total | 12,445 | 100.0 | 330 | 100.0 | 310 | 93.9 |

Publication year

Publication Year Distributions in WoS and OpenAlex

Turning now to discrepancies in publication years for articles in WoS and OpenAlex, we first present a statistical summary of the publication year in OpenAlex and WoS (Table 9).

| Database | n | mean | sd | var | q1 | median | q3 | min | max | skew | kurtosis |
|---|---|---|---|---|---|---|---|---|---|---|---|
| OpenAlex | 5,936,235 | 2021.904 | 0.952 | 0.905 | 2021 | 2022 | 2023 | 1915 | 2025 | -7.433 | 537.606 |
| WoS | 5,936,235 | 2021.969 | 0.820 | 0.673 | 2021 | 2022 | 2023 | 1998 | 2023 | -0.055 | -0.475 |

Publication Year Discrepancies

We observe a high prevalence of publication year discrepancies in articles and reviews in WoS and OpenAlex, with 470,107 cases identified (see Table 10). We obtain the direction and extent of the discrepancies by subtracting the OpenAlex publication year from the WoS publication year. A difference of 1 indicates that, according to WoS, the article was published one year later than according to OpenAlex. Conversely, a difference of -1 indicates that the article was published one year earlier according to WoS than according to OpenAlex.

| Difference in publication year (WoS - OpenAlex) | Discrepancies (n) | Discrepancies (pct) | Sample (n) | Sample (pct) |
|---|---|---|---|---|
| < -3 | 2,069 | 0.4 | 20 | 5.1 |
| -2 to -3 | 2,549 | 0.5 | 20 | 5.1 |
| -1 | 58,526 | 12.2 | 38 | 9.6 |
| 1 | 392,681 | 81.7 | 276 | 70.1 |
| 2 to 3 | 23,166 | 4.8 | 20 | 5.1 |
| Total | 480,884 | 100.0 | 394 | 100.0 |

Explanation for Publication Year Discrepancies

From our investigation of the discrepancies, we identified four possible sources for the publication year that a record can have in either database:

- Issue year: the year of the journal issue in which the article is officially published. This is also the year included in the reference to the article.

- First publication year: the year in which the article was first published online before it was assigned to an issue.

- Other year: another year that is recorded in the article’s history but is not a publication year (e.g., submission year, accepted year).

- Source unclear: we use this code when the publication year in the WoS or OpenAlex record does not match any of the years listed on the landing page or in the PDF.

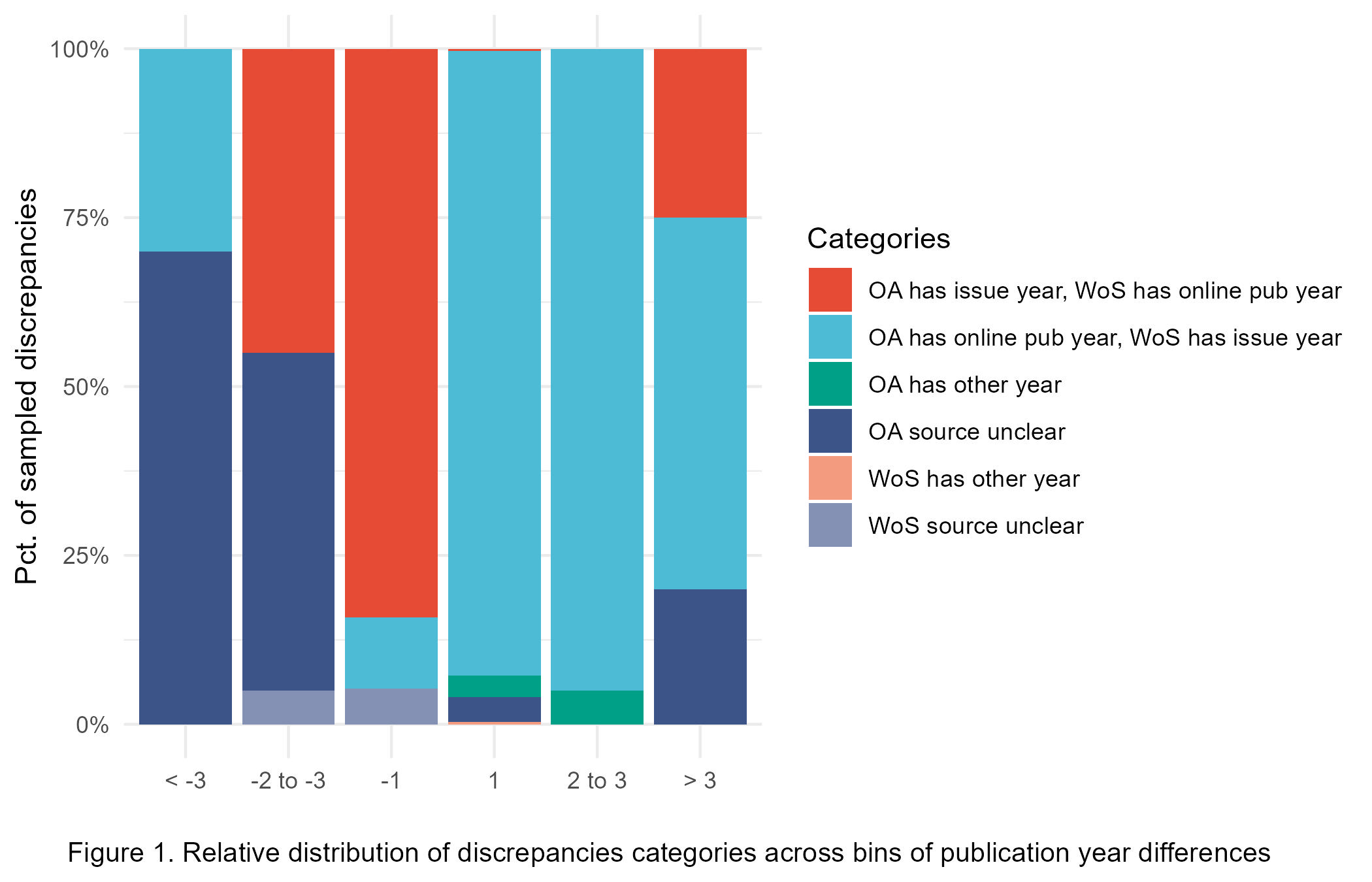

We used these four scenarios to group publication year discrepancies between WoS and OpenAlex into six categories. The first two are 1) OA has first year, WoS has issue year and 2) OA has issue year, WoS has first year. In these cases, we considered both databases to be correct because both dates are actually referring to a publication event. The following four categories indicate errors in one of the databases that include none of the two accepted publication years: 3) OA has other year, 4) OA source unclear, 5) WoS has other year, and 6) WoS source unclear.
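The coding scheme can be sketched as a small classifier. The coding in the study was done manually, so this is only an illustration of the logic; the function names, argument names, and example years are hypothetical, and the order in which a year is tested against the issue year, first publication year, and other years is an assumption.

```python
def year_source(db_year, issue_year, first_year, other_years=()):
    """Identify which documented year, if any, a database's year corresponds to."""
    if db_year == issue_year:
        return "issue year"
    if db_year == first_year:
        return "first year"
    if db_year in other_years:
        return "other year"
    return "source unclear"


def classify_year_discrepancy(wos_year, oa_year, issue_year, first_year,
                              other_years=()):
    """Return the category label(s) for one publication year discrepancy."""
    wos_src = year_source(wos_year, issue_year, first_year, other_years)
    oa_src = year_source(oa_year, issue_year, first_year, other_years)

    # Categories 1 and 2: both databases record a valid publication year.
    if oa_src == "first year" and wos_src == "issue year":
        return ["OA has first year, WoS has issue year"]
    if oa_src == "issue year" and wos_src == "first year":
        return ["OA has issue year, WoS has first year"]

    # Categories 3 to 6: at least one database has a non-publication year.
    labels = []
    for db, src in (("OA", oa_src), ("WoS", wos_src)):
        if src == "other year":
            labels.append(f"{db} has other year")
        elif src == "source unclear":
            labels.append(f"{db} source unclear")
    return labels


# First published online in 2021, assigned to a 2022 issue.
print(classify_year_discrepancy(2022, 2021, issue_year=2022, first_year=2021))
```

A list is returned because both databases could, in principle, carry an erroneous year for the same record.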

As shown in Figure 1, erroneous publication years are rare overall in the sample that we analyzed and mostly occur when there are significant discrepancies between the WoS and OpenAlex publication years. However, these errors appear to be more prevalent in OpenAlex. For half of the sampled articles published 2 or 3 years later according to OpenAlex compared to WoS, the source of the OpenAlex publication year was not found on the article landing page or PDF and appeared to be wrong. This was also the case for 20% of the articles with a publication year in OpenAlex three or more years earlier than in the WoS. In contrast, we only found a few instances (four in total) where the WoS publication year appeared to be erroneous.

Figure 1 shows that discrepancies for which the OpenAlex year is prior to the WoS year are largely explained by cases where OpenAlex has the first publication year and WoS has the issue year, especially when the discrepancy is small (one year). This is expected given that the first publication year is typically before the journal issue year. Inversely, most cases in which the WoS publication year is prior to the OpenAlex publication year are explained by cases where WoS has the first publication year and OpenAlex has the journal issue year. Interestingly, neither database appears to be systematic in the way it indexes the year of first publication or the year of the journal issue.

Discussion and conclusion

Summary of findings

In some regards, our findings generally offer support to past claims that metadata quality in OpenAlex has room for improvement. It is particularly the case for document types, with 300,000 cases where a publication was classified as an article or review in OpenAlex but not in WoS, and almost all manually verified instances pointing to a true misclassification in OpenAlex. This may be a cause for concern as document type classifications are critical for bibliometric research and evaluation. Document type misclassifications were also present in WoS, although they were much less frequent.

Discrepancies in publication language were also observed in both databases, although they were less common by an order of magnitude. In this case, we did not identify a clear advantage for one database over the other. There were more cases of articles in English in WoS and not in OpenAlex than the other way around; however, WoS was actually correct 53% of the time. On the other hand, OpenAlex had fewer articles in English that were in another language according to WoS, but those cases tended to be true misclassifications in OpenAlex. In several instances, both databases were technically correct because we found that the article was in fact published in multiple languages. This highlights a potential issue with metadata schemas that only allow a single value for language. The language discrepancies observed may also be explained by the fact that the OpenAlex language field is algorithmically derived from the metadata (title and abstract), and that the langdetect algorithm is limited to 55 languages (Céspedes et al., 2024). The lack of standardized schemes for the romanization of non-Roman languages may also cause issues in terms of both metadata quality and the ability of algorithms to properly detect language (Shi et al., 2025).

Our investigation of publication year discrepancies highlighted that the year of first publication tended to be preferred by OpenAlex, while the use of the issue year was more frequent in WoS. However, both databases displayed inconsistencies in that regard. This challenge, which stems from the ambiguity of the publication year and the different, arguably equally valid, approaches to recording it, has been previously identified and discussed by Haustein et al. (2015) and Liu et al. (2021). This is another limitation that could potentially be fixed by recording all the years in the metadata schema rather than allowing only one publication year.

Overall, OpenAlex had the correct author count for 53.7% of the subset of works, whereas WoS was correct for 33.2% of the subset. The listing of consortia or other group identifiers on the byline was a major cause of discrepancies. While both OpenAlex and WoS have other methods for extracting authors from landing pages and full text, publisher-supplied metadata should align with the published work. There is great inconsistency in how these groups are accounted for, how credit is given for authorship, how members are identified, and the language used to signify how authors work on behalf of or as part of a consortium. In some cases, the complete author list was included, but in other cases, we could not find a list of the group or consortium members. More work needs to be done to document these local practices to promote consistency in author reporting and responsibilities. We also consistently observed that OpenAlex excludes organization names as contributors, even if they are listed in the byline on the landing page and in the PDF version of an article. Its process does not seem to affect singular names, such as those used in non-Western naming conventions, but it does seem to exclude consortia and other group identifiers appearing in the byline. Conversely, WoS seems to consistently count the group author as a single author.
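
To make the effect of these two conventions concrete, the sketch below counts the entries of a byline with and without group authors. The byline structure, the `is_group` flag, and the example names are hypothetical and assumed to have been assigned by hand; the sketch does not reproduce either database's actual extraction process.

```python
def count_authors(byline, include_groups):
    """Count byline entries, optionally counting each group as one author."""
    return sum(1 for entry in byline if include_groups or not entry["is_group"])


# Hypothetical byline: three individuals followed by one consortium.
example_byline = [
    {"name": "Author One", "is_group": False},
    {"name": "Author Two", "is_group": False},
    {"name": "Author Three", "is_group": False},
    {"name": "Example Consortium", "is_group": True},
]

# Convention that drops group identifiers (as observed for OpenAlex).
count_without_groups = count_authors(example_byline, include_groups=False)
# Convention that counts the group as a single author (as observed for WoS).
count_with_groups = count_authors(example_byline, include_groups=True)
```

Under these assumptions, the same byline yields two different author counts, and neither count reflects the number of consortium members who may be credited elsewhere in the article.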

On the accuracy and internal consistency of metadata and their implications for bibliometrics

Sometimes there may be an absolute truth against which we can judge the accuracy of a metadata record. For example, a single-author article recorded as having any other number of authors in a database constitutes a true error and was considered as such in our analysis.

However, as we have discussed throughout the different sections of our analysis, a discrepancy between two records representing the same object in two databases does not necessarily imply the presence of an error in one database or the other. This was particularly evident for publication years and author counts. This supports our choice not to consider one database as the gold standard against which the other is to be evaluated. While this may firmly entrench us in a perpetual instance of Segal’s law, it is arguably more reasonable to acknowledge that different databases may yield slightly different outcomes that are both valid. Of course, we are not suggesting that a database cannot contain more or fewer errors, or be considered more or less reliable, than another. We are merely stating that, in some instances, discrepancies between two databases do not allow us to draw firm conclusions about the superiority of one over the other in terms of reflecting the reality that they are meant to capture.

On the other hand, if and only if the database providers have clear and transparent metadata policies, then we may be able to assess a database’s internal consistency relative to these policies. In our analysis of author counts, for instance, WoS appeared to be very consistent in its treatment of group authors, which were almost always counted as a single author. If that is indeed the intent, then our assessment would be that there is a high level of internal consistency. However, the possibility remains that this is not the intended way of counting authors, in which case the metadata in WoS would be internally consistent but consistently erroneous, which is arguably worse than being wrong only some of the time.

In sum, we would argue that assessing the validity of a metadata element can only be done by comparing it to the full range of possible truths and by considering its consistency with the database’s internal policy. We can then classify any metadata element into one of four categories: 1) internally consistent and valid, 2) internally consistent but invalid, 3) internally inconsistent but valid, and 4) internally inconsistent and invalid. In the absence of clear policies regarding specific metadata elements, it may be challenging to determine what is an issue and what is not. Differences among data sources regarding indexing coverage are, to a degree, understood and part of decision-making. For example, the choice of WoS and Scopus to be selective in the sources they cover contrasts with OpenAlex’s focus on comprehensiveness. It is up to the users to understand the tools they use and to make choices that align with the needs of their analyses (Barbour et al., 2025).
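
The four categories can be expressed as a simple two-by-two classification. The sketch below is illustrative only: the function name and its two boolean inputs are hypothetical, and it assumes that both judgments (consistency with the provider's policy, and validity against the range of possible truths) have already been made by a human coder.

```python
def classify_metadata_element(consistent_with_policy: bool, valid: bool) -> str:
    """Map two manual judgments onto one of the four categories."""
    consistency = (
        "internally consistent" if consistent_with_policy else "internally inconsistent"
    )
    validity = "valid" if valid else "invalid"
    # "and" when both judgments agree (both good or both bad), "but" otherwise.
    joiner = "and" if consistent_with_policy == valid else "but"
    return f"{consistency} {joiner} {validity}"
```

For instance, a group author that is always counted as a single author, when the provider's policy actually intends otherwise, would fall under "internally consistent but invalid".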

It is also important to acknowledge that database providers do not usually create metadata records from scratch but work within an ecosystem of actors and data infrastructures from which their metadata records are sourced. A lack of standardization of practices across providers and a lack of quality control at the journal or publisher level may pose important challenges to aggregators like OpenAlex and WoS. Indeed, Elsevier acknowledged metadata issues as occasional processing errors inherent to large databases (Meester et al., 2016).

Investigations of the coverage and metadata quality of databases matter because these are tools that we use to conduct bibliometric research and assessments. Are validity and consistency sufficient criteria to consider a record reliable in this context? We would argue that they are not. When calculating some of the most common bibliometric indicators, such as the normalized citation score, we want to compare an article with other articles of the same document type (comparable forms of output) that were published in the same year (comparable time to accumulate citations). Of course, this approach is already imperfect, as an article published on January 1 will be compared with articles published on December 31 of the same year (twelve months apart), but not with articles published on December 31 of the prior year (one day apart). These, however, are methodological choices that the bibliometrics community makes when designing and adopting indicators. In contrast, dealing with alternate truths regarding the publication year of an article (e.g., first publication year vs. issue year) is not a choice, but a deviation from the intent behind the use of an indicator. This issue would perhaps be negligible if each database were internally consistent, but our findings suggest that this is not necessarily the case. More than 50,000 discrepancies in publication years occurred because WoS used the online publication year and OpenAlex used the issue year, and nearly 390,000 occurred for what appears to be the reverse reason.
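
The sensitivity of such an indicator to the recorded publication year can be illustrated with a deliberately simplified sketch. It assumes a normalized citation score defined as a work's citations divided by the mean citations of works with the same document type and publication year; field normalization is omitted, and the records and citation counts are invented toy values, not data from this study.

```python
from collections import defaultdict
from statistics import mean


def normalized_citation_scores(records):
    """Return {id: citations / mean citations of the same (doc_type, year) group}."""
    groups = defaultdict(list)
    for rec in records:
        groups[(rec["doc_type"], rec["year"])].append(rec["citations"])

    scores = {}
    for rec in records:
        expected = mean(groups[(rec["doc_type"], rec["year"])])
        # The score is undefined when the reference group has no citations.
        scores[rec["id"]] = rec["citations"] / expected if expected > 0 else None
    return scores


def toy_records(year_of_x):
    """Invented toy data: paper X plus one reference article per year."""
    return [
        {"id": "X", "doc_type": "article", "year": year_of_x, "citations": 10},
        {"id": "A", "doc_type": "article", "year": 2021, "citations": 20},
        {"id": "B", "doc_type": "article", "year": 2022, "citations": 5},
    ]


# Same paper, same citations, two equally defensible publication years.
score_first_pub_year = normalized_citation_scores(toy_records(2021))["X"]
score_issue_year = normalized_citation_scores(toy_records(2022))["X"]
```

In this toy setting, paper X falls below the average of its reference set when it is assigned to the earlier year and above it when it is assigned to the later year, even though nothing about the paper itself has changed.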

Limitations and future work

One of the main limitations of our study is its scope. We focused on four metadata elements, but the databases contain many more that we did not consider. Furthermore, we only compared OpenAlex with WoS and not with other databases, and we only considered publications published between 2011 and 2013 in either database. This provides us with a current snapshot of the databases, but no insights into the temporal dynamics of the discrepancies. Our investigation of discrepancies was also limited to the information easily accessible on the article’s landing page and in the PDFs. OpenAlex and WoS draw much of their data from other sources, such as Crossref, which may offer additional information that could explain some of the discrepancies observed (Eck & Waltman, 2025). Another important limitation is that the OpenAlex database is rapidly evolving, and some of the observed issues may be caused by processes that have since been modified.

This study focused on the nature of the discrepancies and their frequency. We discussed the possible implications that they may have for bibliometric analyses and research evaluation, but more research will be needed to empirically determine if and how the outcomes of bibliometric analyses are impacted at different levels. We also did not investigate disciplinary differences in the discrepancies. Further research should take into account additional metadata elements, examine disciplinary differences, and assess how metadata quality issues in OpenAlex could affect institutional-level metrics and, thus, the results of institutional rankings like the open edition of the Leiden Ranking.

The Paris Conference on Open Research Information and the Barcelona Declaration on Open Research Information emphasize the need for and normalization of open research information. With the tide turning toward open data sources and researchers and institutions embracing OpenAlex and other open data sources and tools, more research will be needed on the quality and coverage of OpenAlex and the other data sources it depends on. This carries implications for OpenAlex, as they look to the research community for feedback on necessary improvements to their metadata, as well as for those conducting research and research evaluation using open sources, who must remain apprised of findings related to limitations of the data they use.

Conflicts of interest

The authors have no conflicts of interest to report.